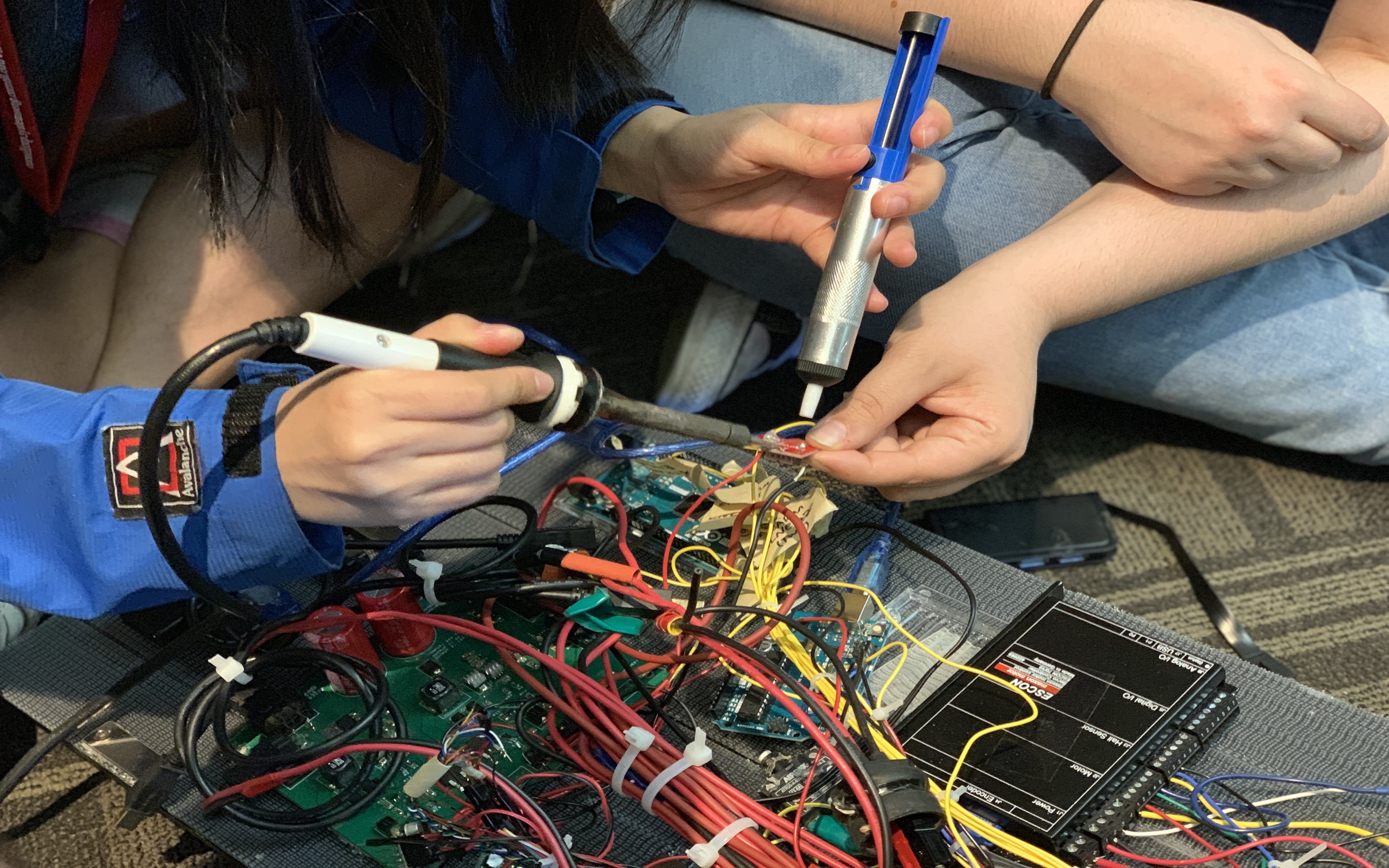

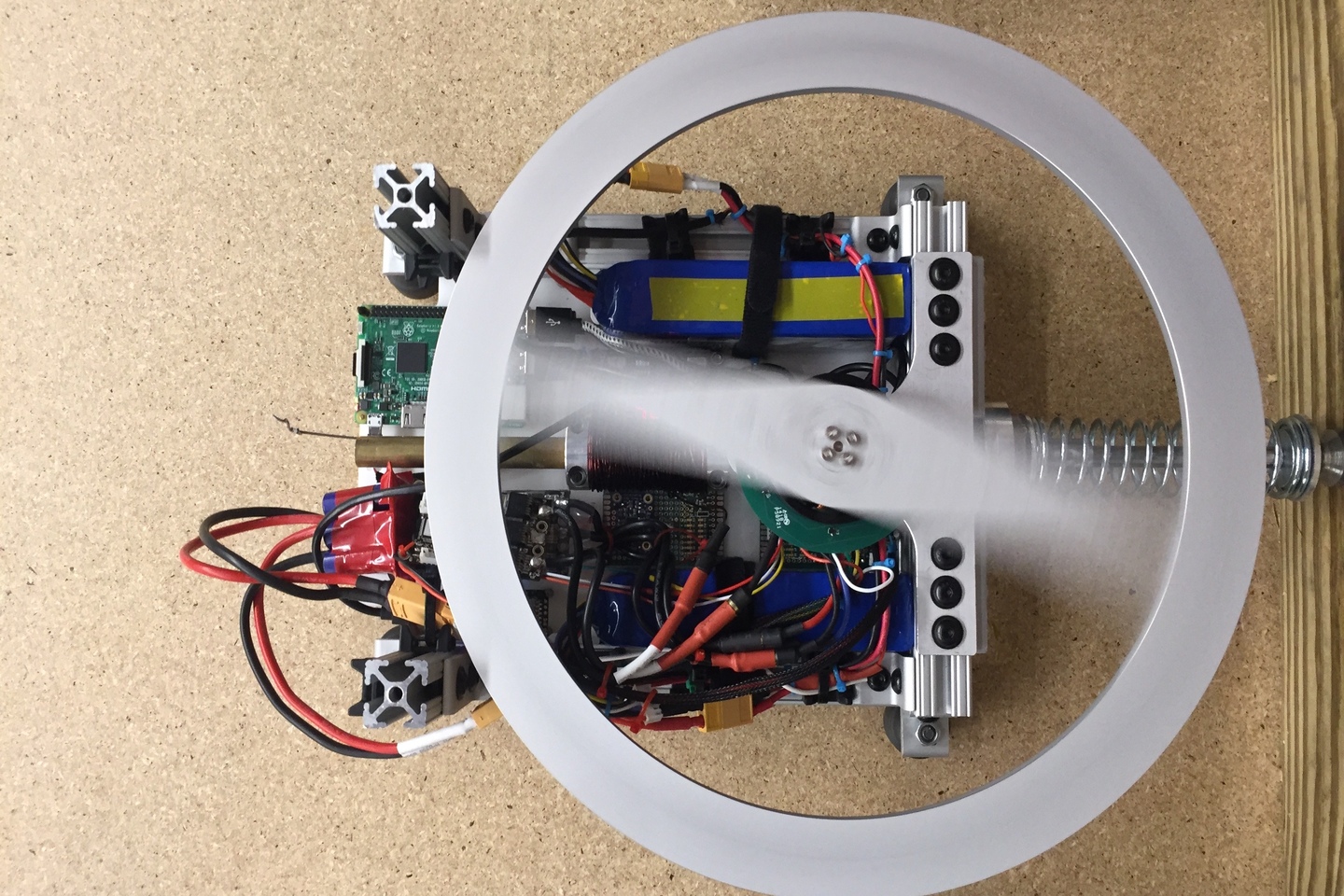

Above: Image of our mini pod-racer simulator at the National Maker Faire in 2017

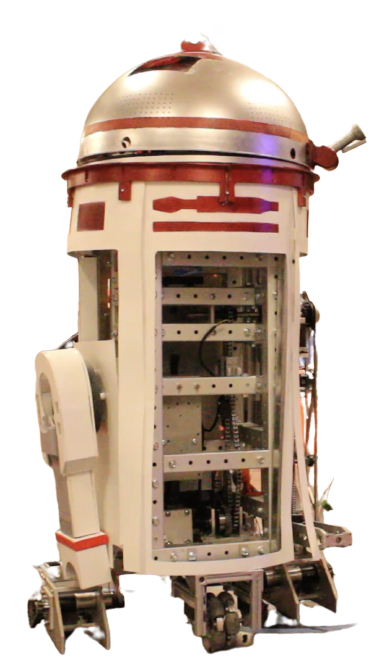

C1C0

MechE Leads: Zack Ginesin, Keegan Lewick

ECE Lead: Laurence Lai

CS Lead: Mohammad Khan

The original R2D2 project focused on creating a semi-autonomous lab assistant that could navigate and map its surrounding environment. We have since renamed that project C1C0 and given it the ability to interact with its surroundings. This allows the droid to complete tasks such as opening a door, recognizing and greeting individual people, and even firing a Nerf dart at a target! To generate excitement and interest in robotics and engineering, the team has showcased the C1C0 project at various events, such as club fairs and science fairs, and is looking for more.

Facial Recognition

The goal is to recognize the faces of Cornell Cup members and then check them in for attendance. We are currently working on having faster transmission between the programs, learning faces on the fly, and comparing results against the members on the website.

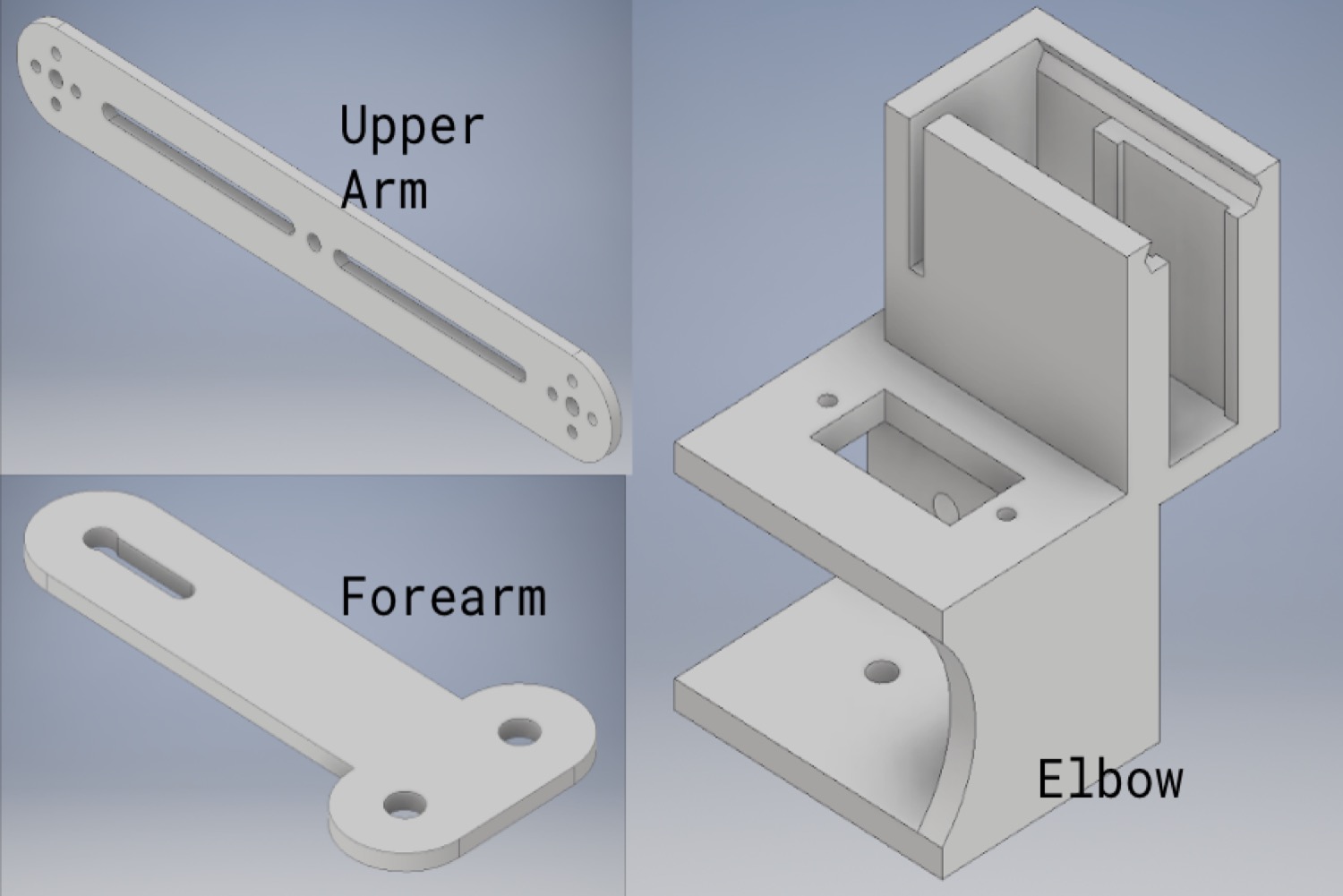

Strong Arm

We are designing a durable robotic arm for C1C0's higher-force operations, such as holding open doors and picking up heavy objects. The arm can produce upwards of 25 pounds of force.

Object Detection

The goal of this project is to be able to classify and locate objects around the lab from an image capture and successfully grasp target objects based on user commands. This project works closely with computer vision and machine learning, and implements inverse kinematics to carry out C1C0’s precision arm tasks.
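To give a feel for what inverse kinematics means here, the sketch below solves it for a hypothetical two-link planar arm, which is far simpler than C1C0's actual arm. The link lengths and the function names `two_link_ik` and `two_link_fk` are assumptions made for illustration, not the team's code.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Joint angles (shoulder, elbow) in radians that place the tip at (x, y).

    Returns the solution with a non-negative elbow angle; a mirrored
    solution also exists. Raises ValueError if the target is out of reach.
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target out of reach")
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow

def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics, used here to check the inverse solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

Feeding the angles from `two_link_ik` back into `two_link_fk` should reproduce the requested target, which is a convenient sanity check before commanding real motors.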

Speech & Sentiment Analysis

The purpose of this project is to make C1C0 able to react to sentiment in the user's speech. C1C0 reacts either negatively, by playing a sad noise on its speaker, or positively, by playing a happy sound on its speaker, based on the sentiment of the user's speech.
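The reaction logic described above can be sketched roughly as follows. This is a toy stand-in: the word lists, the file names `happy.wav` and `sad.wav`, and the choice to stay silent on neutral speech are all assumptions, and a real system would likely use a trained sentiment model rather than keyword counting.

```python
# Hypothetical word lists; a real system would use a trained sentiment model.
POSITIVE_WORDS = {"good", "great", "love", "thanks", "awesome", "happy"}
NEGATIVE_WORDS = {"bad", "hate", "terrible", "awful", "sad", "angry"}

def sentiment_score(text):
    """Count positive words minus negative words in the transcribed speech."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    return positives - negatives

def choose_sound(text):
    """Pick the sound to play, or None when the speech reads as neutral."""
    score = sentiment_score(text)
    if score > 0:
        return "happy.wav"  # hypothetical file name
    if score < 0:
        return "sad.wav"    # hypothetical file name
    return None
```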

Head

The team is working on integrating a canopy to hold a Baby Yoda, a lens aperture winking mechanism, and the head structure. We are also designing the new head to better hold the internal and external features on C1C0.

Precision Arm

We are designing a high-precision robotic arm for use on C1C0. This arm has 6 degrees of freedom and will allow C1C0 to perform various tasks, including waving hello, opening a door, and picking up a pen.

Chatbot

The goal of Chatbot is to enable C1C0 to respond intelligently to a wide range of questions and commands. This project involves two difficult tasks, filtering speech from noise and natural language processing, and it serves as the central hub for communication between the user, C1C0, and its other computing processes.

Locomotion

We are using serial ports to map C1C0's motors to buttons on an Xbox controller, enabling it to perform complex motions without skidding out of control.
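One way such a controller-to-motor mapping could look is sketched below. It assumes a two-motor differential drive, joystick values in the range -1 to 1, and a made-up three-byte packet layout (a `0xFF` header followed by two signed speed bytes); none of these details come from the C1C0 codebase.

```python
import struct

def mix_arcade(throttle, turn):
    """Convert joystick throttle/turn (-1..1) into left/right motor commands.

    Both outputs are scaled together so neither exceeds the -1..1 range,
    which keeps the requested turning ratio intact at full stick.
    """
    left = throttle + turn
    right = throttle - turn
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale

def encode_packet(left, right):
    """Pack motor commands into bytes for a serial link.

    Hypothetical layout: header byte 0xFF, then two signed bytes (-127..127).
    """
    return struct.pack("<Bbb", 0xFF, round(left * 127), round(right * 127))
```

The resulting bytes could then be written to an open serial port, for example with pyserial's `Serial.write`, at whatever rate the motor controller expects.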

Path Planning

The team is working on enabling C1C0 to traverse different terrains and avoid obstacles. This project requires integrating many different sensors, including LIDAR, indoor GPS, and a gyroscope, translating their data into algorithms, and optimizing for speed.
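As a simplified picture of obstacle avoidance, the sketch below finds a shortest route across an occupancy grid using breadth-first search. The grid representation (0 for free, 1 for blocked) and the function name `shortest_path` are assumptions, and breadth-first search is only a stand-in; the page does not say which planning algorithm C1C0 uses.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search over a grid of 0 (free) and 1 (obstacle) cells.

    start and goal are (row, col) tuples. Returns the list of cells from
    start to goal, or None when no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

In practice the grid would be built from sensor data such as LIDAR scans, and a cost-aware planner would replace the uniform-step search when terrain difficulty matters.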

XRP

MechE Leads: Keegan Lewick, Zack Ginesin

ECE Leads: Anne Liu, Amy Lee

CS Lead: Sanjana Nandi

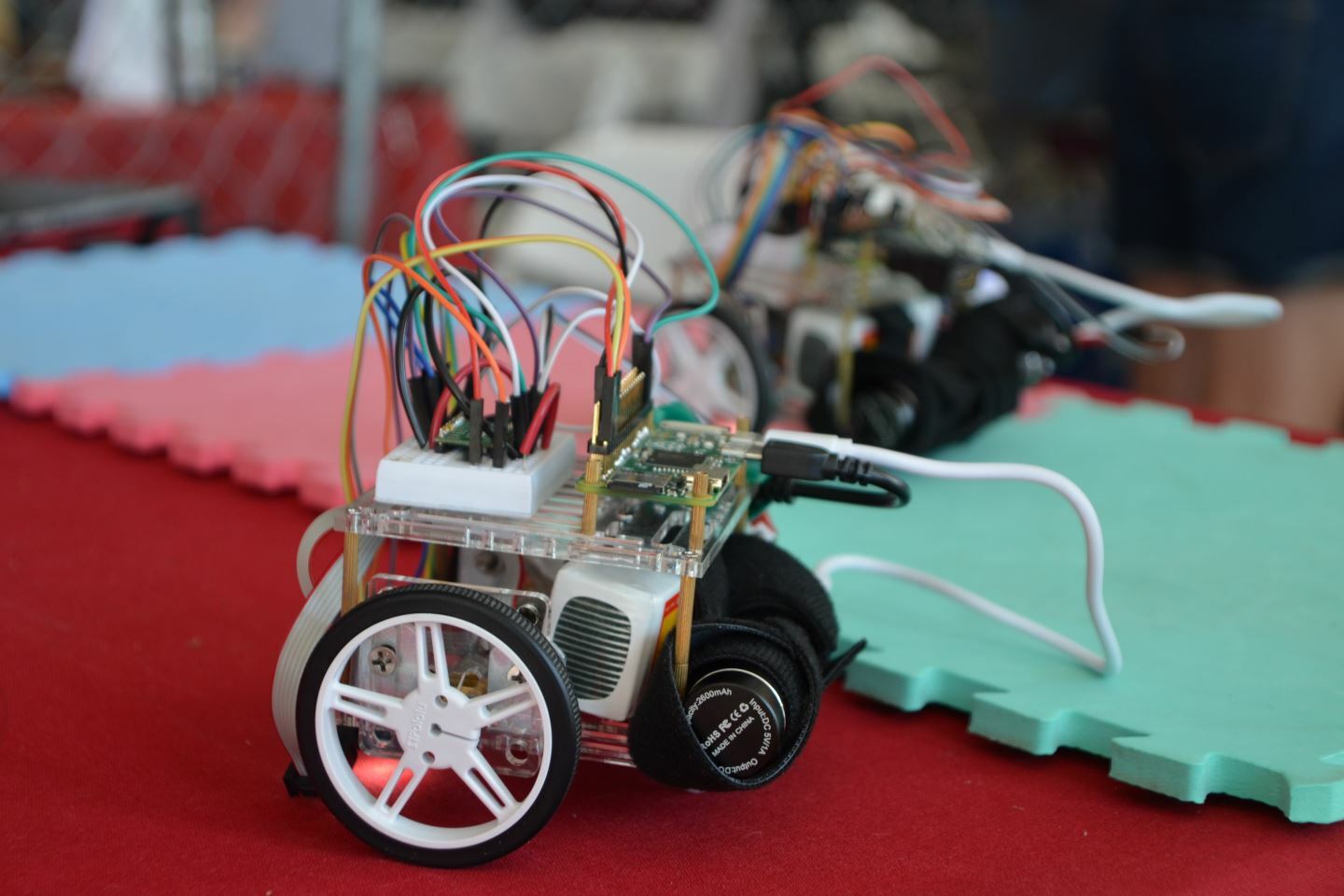

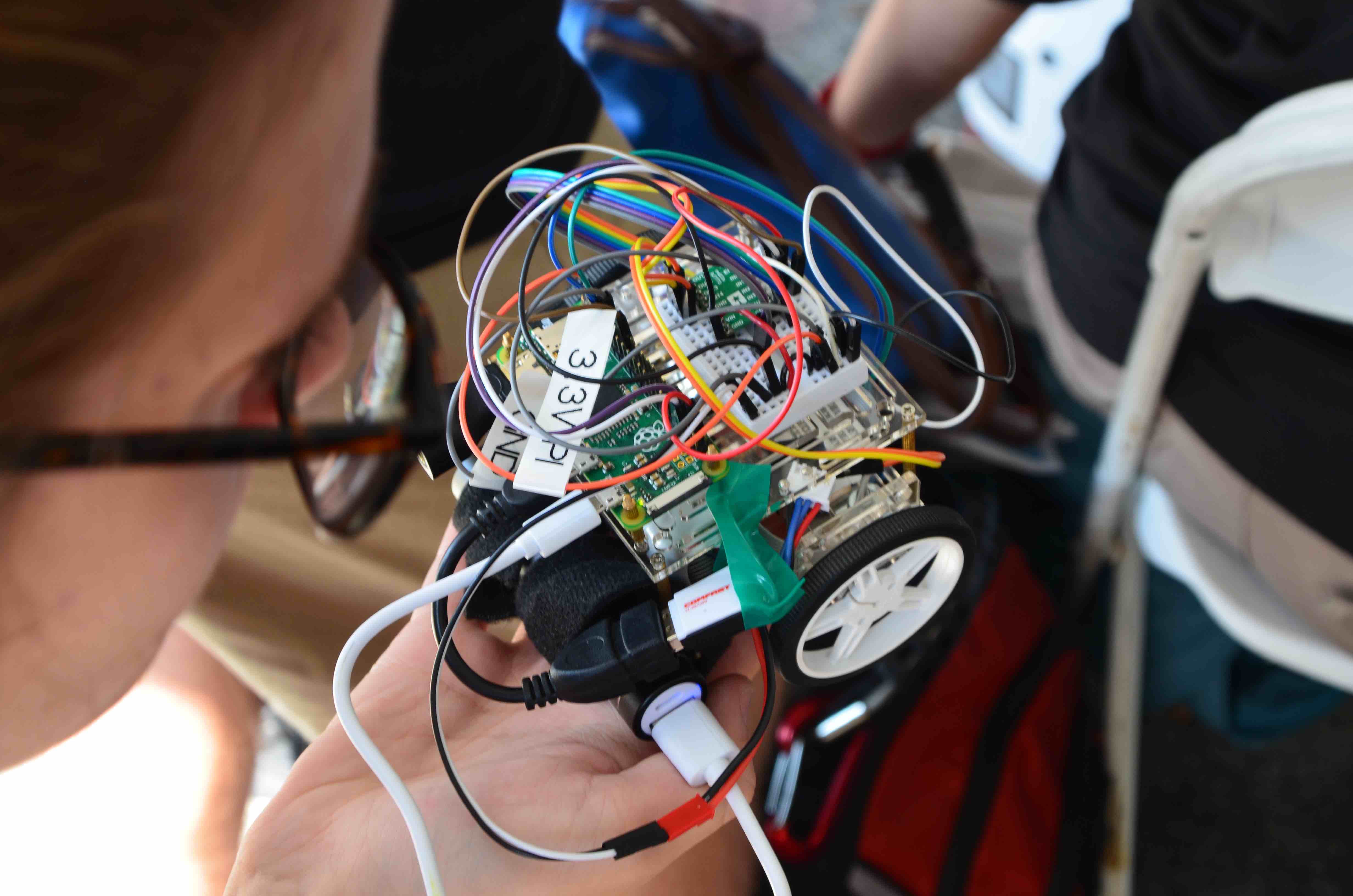

The XRP robot is a low-cost, easily accessible education platform designed to serve everyone from K-12 students taking their first steps toward a possible STEM career to graduate students who want to use its open-source, open-hardware community to study advanced topics in embedded and Internet of Things systems, computer vision, controls, AI, and much more. Created in collaboration with FIRST, the world’s most prominent K-12 STEM competition with over 1,000,000 active participants worldwide, along with SparkFun, Deka, Raspberry Pi, STMicroelectronics, DigiKey, WPI, and of course Cornell, the XRP is poised to make a significant impact in empowering students and teachers to meet the growing need for STEM-savvy citizens in our society.

The first test flight of the Astrobee-based XRP took place in November 2024 with award-winning high school teacher Aymette Jorge, and it featured the team’s XRP emotion system. The next test flight will occur in May or November 2025, and thanks to the generosity of the Baran Agency, the complete Astrobee-based XRP will launch into space in 2026 with Cornell Cup alumna Renee Frohnert!

The team also showcases its latest work every year at the FIRST National Championship in Houston, Texas, to an audience of 50,000 attendees, alongside companies and organizations like Walt Disney Imagineering, NASA, Boeing, Qualcomm, BAE, Deka, and many more! Additionally, the XRP is already used in over 190 countries and is in every classroom in the state of New Hampshire! Stay tuned for even more exciting developments coming soon!

Assembly, modularity, and programming

- Classic line-follower, maze solvers, and sumo event versions, incorporating popular robotic building materials like Legos.

- Race cars controlled in "first person" through an on-board camera stream.

- Agricultural versions for monitoring and tending to plant health and growth.

- A space-travel version, developed with assistance from NASA's Astrobee team and Lockheed Martin RMS.

BuddyBot

BuddyBot is a mini-project that increases Minibot’s usability and acts as a miniature companion robot to our R2 robot. BuddyBot is not meant to be licensed for educational purposes. Instead, it tests the limits of the Minibot technology by incorporating more advanced, novel features and technologies that novices may not be able to use freely. We hope BuddyBot inspires young inventors to participate in robotics.

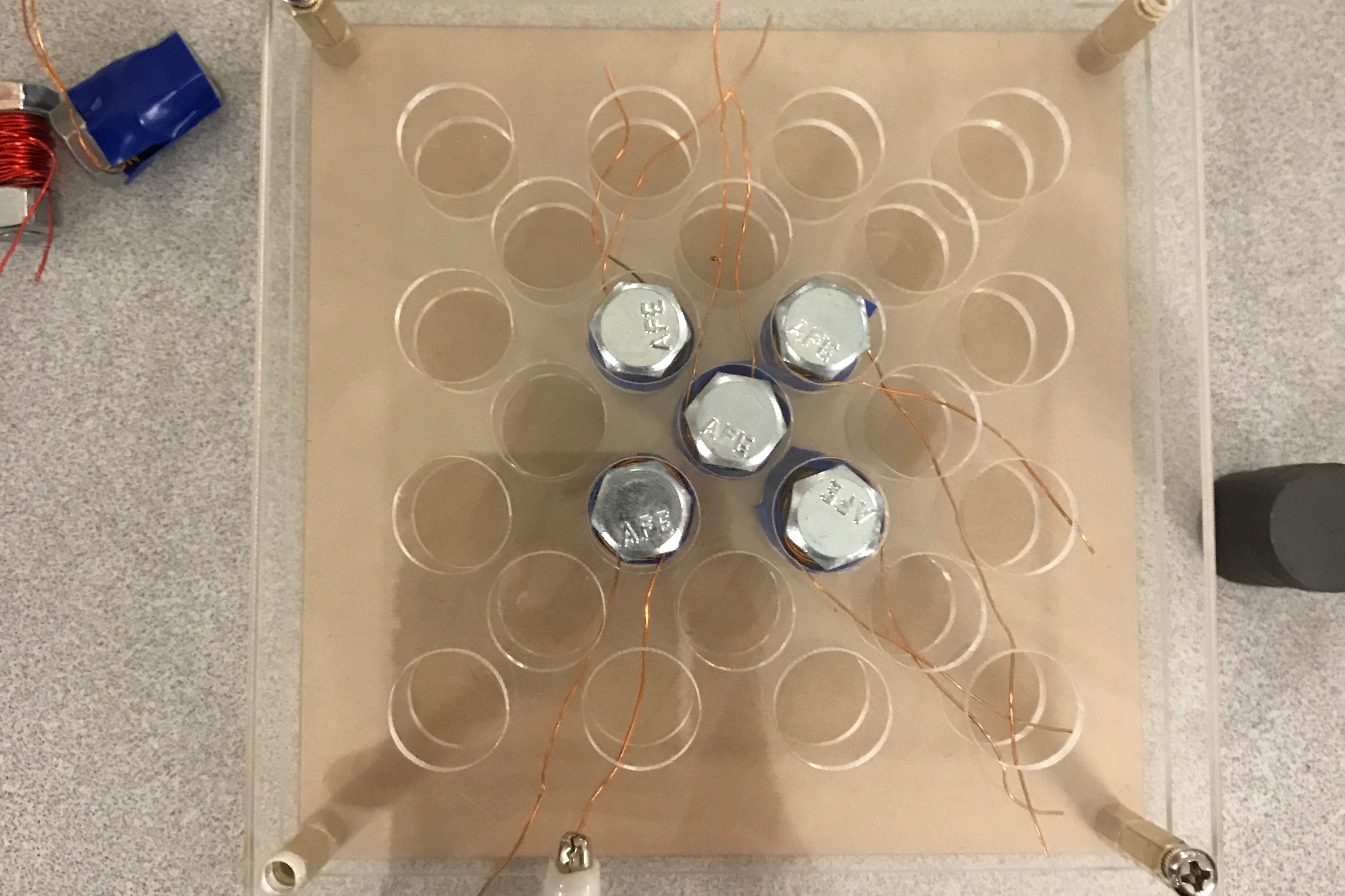

Vision

An overhead vision positioning system that tracks the robot's position and orientation with accuracy of up to a centimeter.

Impact on young students

A means for younger students to create their own custom Blockly blocks as they take their first steps in learning Python, and a way for even pre-school students to program the robot with physical blocks, cards, or “signposts”.

LCD Touchscreen

An emotion system and on-board LCD screen that help students feel an interpersonal connection with the robot and have even been reviewed by members of Walt Disney Imagineering.

Sensors

Support for a variety of sensors, such as ultrasonic, IR, color, RFID, and pressure sensors, and more that can work with the SparkFun Qwiic system.

General Improvements

A new awning and SnapBolt™ attachment system that allows for greater modularity and flexibility, and more!

B.O.B.

ECE Lead: Vidhi Srivastava

B.O.B., or Bipedal Operational Bot, is our take on a VR-driven bot. Using a combination of reinforcement learning for stability and a body designed to move as much like a natural human as possible, we aim to create an immersive experience that allows the driver to look and move from the perspective of a quarter-scale model. Thanks to 3D printing, B.O.B. is lightweight yet durable, with modular components that allow for adaptability as the project evolves and for quick replacement if B.O.B. is injured. The integration of virtual reality offers remote control through a first-person perspective, allowing users to feel as though they’re truly in the robot’s environment, navigating and interacting without ever putting themselves at risk. Whether maneuvering through dangerous terrain or exploring unreachable spaces, B.O.B. provides an intuitive, lifelike experience unlike any other. Working together with outside teams, we hope to push our research and development sweetheart into the world and make a change!

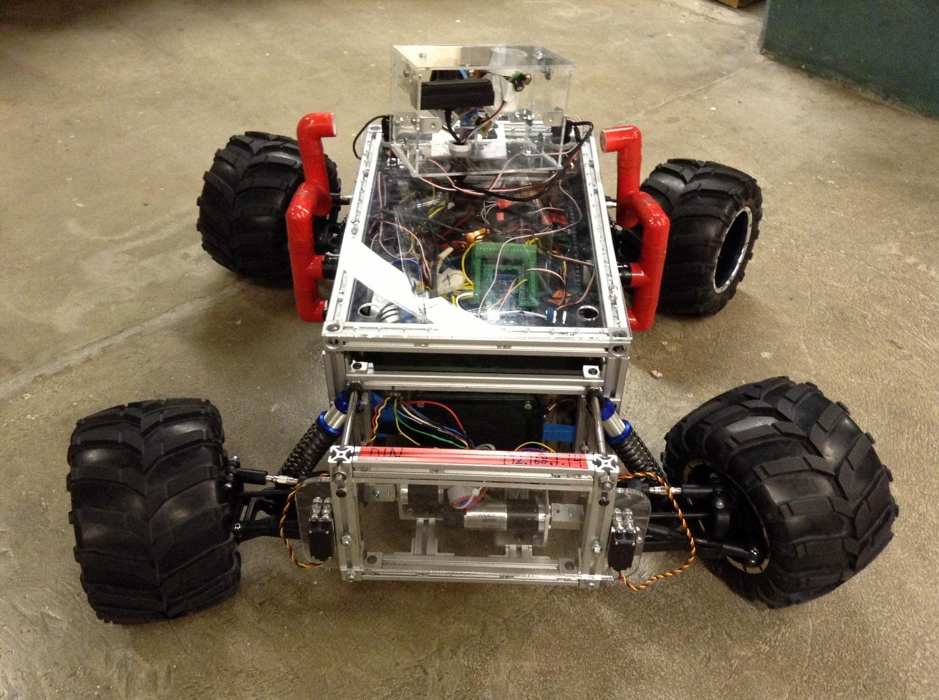

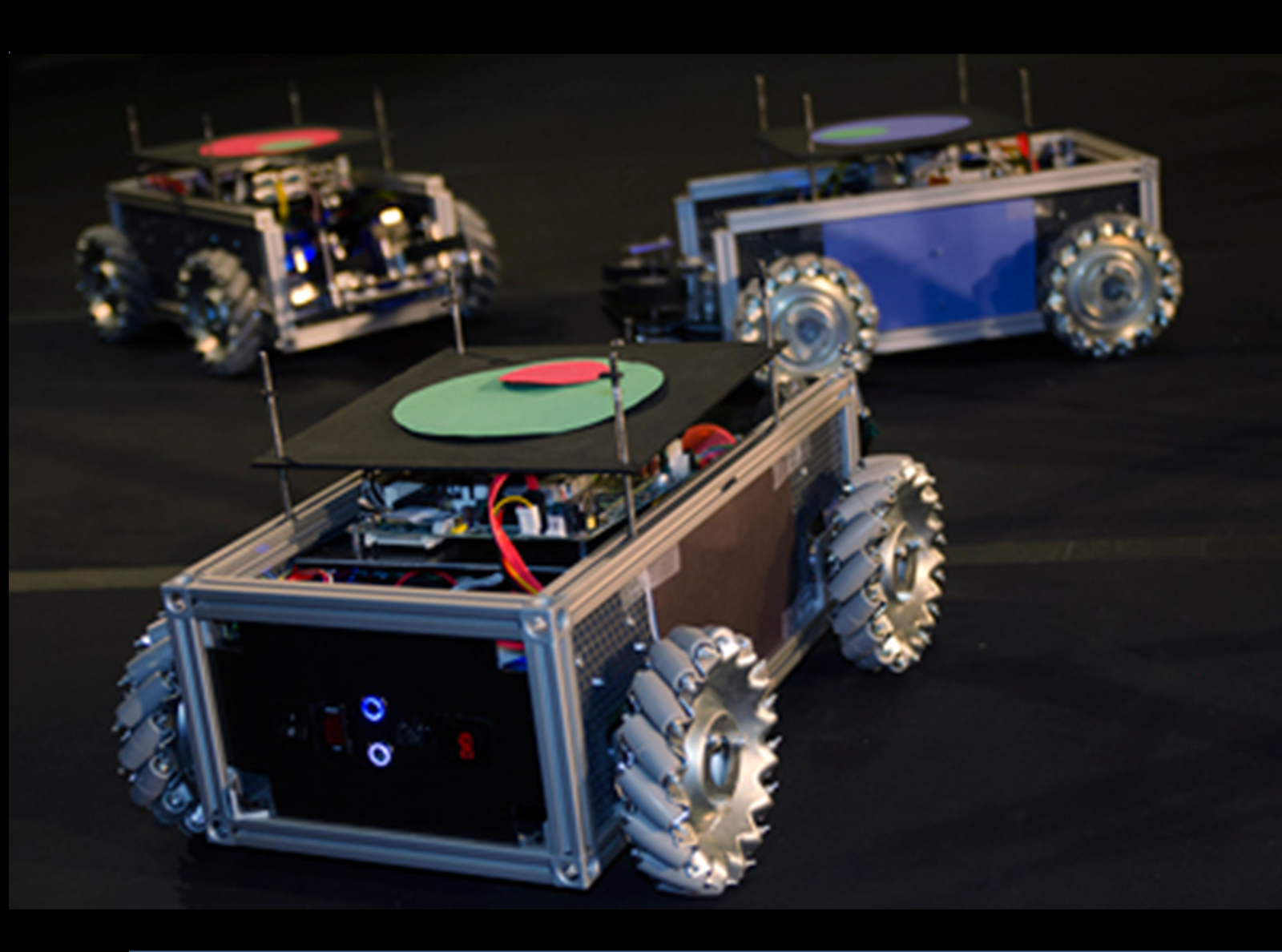

Past Projects

Over the years, Cornell Cup Robotics has successfully created numerous projects. These range from a humanoid robot that can play Rock Band with 98% accuracy, to an autonomous omni-directional rover named DuneBot, and even functional droids inspired by C-3PO.